Dan Reed, COO of Reality Labs at Meta, joins James Kotecki in the CES C Space Studio

In a C Space Studio interview at CES 2025, the world's largest technology and innovation show, host James Kotecki spoke with Dan Reed, COO of Meta's Reality Labs.

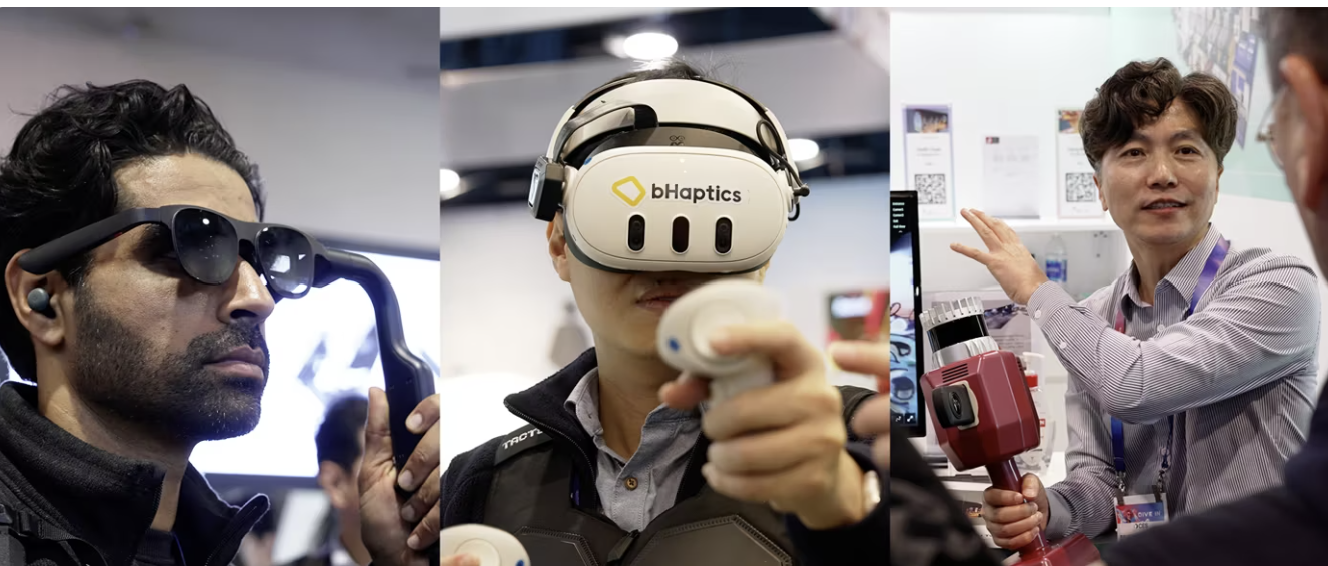

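They discussed Meta's push to integrate augmented reality (AR), virtual reality (VR), and artificial intelligence (AI), and how these emerging technologies will reshape future computing platforms.

The conversation highlighted Meta's progress in AR/VR technology, its integration of AI capabilities, and its aim to fundamentally change how people use digital information in everyday life. These innovations are expected to have a major impact on personal life, social interaction, and accessibility.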

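- The role of Meta's Reality Labs

- Reality Labs is the division within Meta that develops AR, VR, and metaverse technologies.

- It believes the next computing platform will evolve into "spatial computing," which will enable more effective and immersive user experiences.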

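- The evolution from VR to AR (the metaverse)

- VR started mainly in gaming but is gradually expanding into entertainment, fitness, productivity, and other areas.

- AR is reaching the public through smart glasses (e.g., Ray-Ban Meta Smart Glasses) and is expected to evolve into full-display AR glasses.

- The "metaverse" is described as the software and experience layer that connects these hardware innovations.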

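- AI integration: "AI glasses" as intelligent assistants

- Reed emphasized that AI capabilities will turn devices with simple camera and audio functions into intelligent assistants.

- Through the built-in camera, speakers, and microphone, the AI can "see" and "hear" the user's surroundings and respond in real time.

- This enables advanced features such as real-time language translation, remembering where the user parked, and context-aware guidance on the move.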
